In the first part, we discussed why Artificial Intelligence (AI) and Machine Learning (ML) are at risk and tried to explain data poisoning in plain language.

Now, let’s dive a bit deeper into the subject matter.

Both AI and ML technologies detect patterns and analyze user behavior. In the process, they spot unusual behavior and take measures to block it before it becomes a threat or a problem.

For example, ML algorithms can track an employee’s typical working hours or the rhythm of their keystrokes and mouse movements. If anything looks out of the ordinary, the system alerts the appropriate authority.

Admittedly, this is not 100% accurate: someone could work outside their normal hours to meet a strict deadline, or their keystroke pattern may change because of an injury. However, ML tools are designed to catch out-of-the-ordinary activity that humans might miss, such as threat actors using stolen credentials.
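To make the idea concrete, here is a minimal sketch of this kind of behavioral check. It assumes a simple z-score rule over an employee's past login hours; the function name `is_anomalous`, the threshold of 3 standard deviations, and the sample data are all hypothetical, and real products use far richer models.

```python
from statistics import mean, stdev

def is_anomalous(history, value, threshold=3.0):
    """Return True if `value` is more than `threshold` standard
    deviations away from the mean of `history`."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # No variation in the history: any different value is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical login times (hours on a 24-hour clock) for one employee.
login_hours = [8.9, 9.1, 9.0, 8.8, 9.2, 9.0, 9.1, 8.9, 9.0, 9.3]

print(is_anomalous(login_hours, 9.4))  # slightly late start
print(is_anomalous(login_hours, 3.0))  # login at 3 a.m.
```

With this sample data, the slightly late start stays under the threshold while the 3 a.m. login is flagged. The same rule would also flag an employee working late to meet a deadline, which is exactly the false-positive problem described above.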

AI technology, on the other hand, is better suited to protecting against ransomware attacks that infect an organization’s networks. AI can detect such attacks by identifying malicious files on unsupervised computers or networks. It can also detect shadow IT and give insight into the number of endpoints an organization typically uses.

How attackers use data poisoning

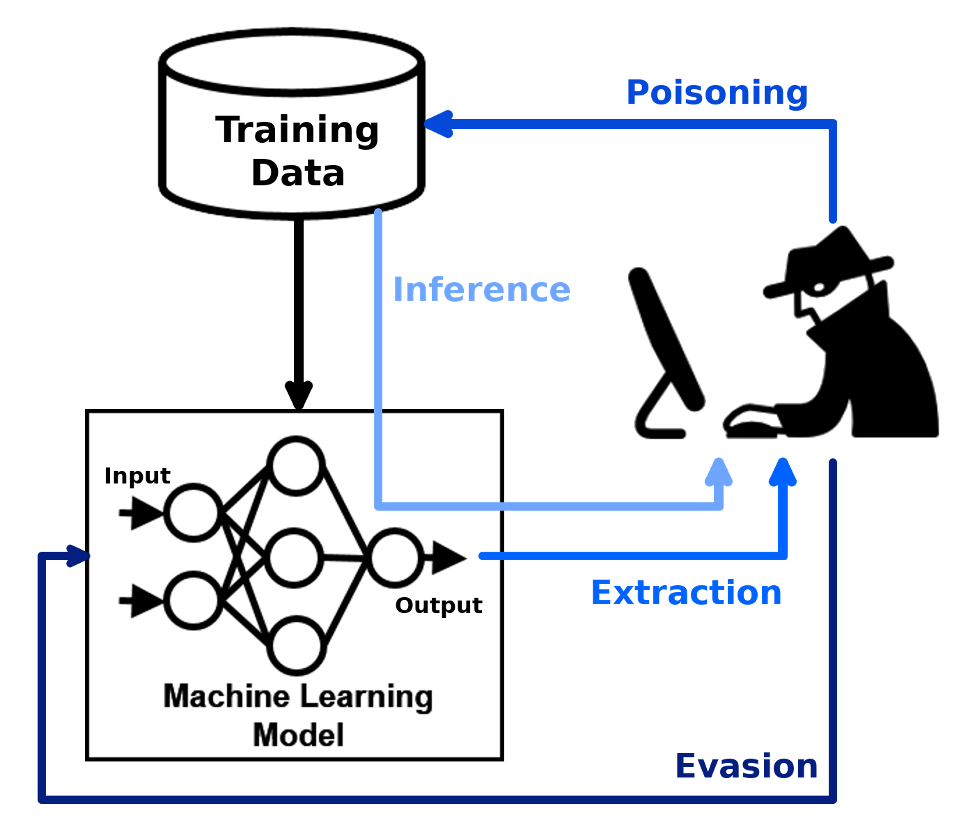

We already know that there are a couple of ways to poison data.

One vital factor to note here is that the threat actor needs access to the data training program. So you are most likely dealing with an insider attack or a business rival who knows your business.

The US Department of Defense is actively looking for the best way to defend its networks and data from data poisoning.

“Current research on adversarial AI focuses on approaches where imperceptible perturbations to ML inputs could deceive an ML classifier, altering its response,” Dr. Bruce Draper wrote about a DARPA research project, Guaranteeing AI Robustness Against Deception. “Although the field of adversarial AI is relatively young, dozens of attacks and defenses have already been proposed, and at present, a comprehensive theoretical understanding of ML vulnerabilities is lacking.”
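The effect Dr. Draper describes can be illustrated with a toy example. The sketch below assumes a hand-written linear classifier with made-up weights; `predict`, `perturb`, and all the numbers are hypothetical and are not taken from the DARPA project. Each input feature is nudged by at most `epsilon` in the direction that hurts the current prediction.

```python
def predict(weights, bias, x):
    """Linear classifier: return 1 if the weighted sum is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0

def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def perturb(weights, x, label, epsilon):
    """Move every feature by at most `epsilon` so that the score is
    pushed away from the currently predicted `label`."""
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]

# Made-up model and input, for illustration only.
weights = [0.5, -0.25, 0.75, -0.5]
bias = 0.0
x = [0.5, 0.5, 0.25, 0.5]

original = predict(weights, bias, x)
x_adv = perturb(weights, x, original, epsilon=0.05)
adversarial = predict(weights, bias, x_adv)

print(original, adversarial)
```

Here no feature changes by more than 0.05, yet the predicted class flips from 1 to 0. With only four features the change is small rather than imperceptible; in models with thousands of inputs, a much smaller per-feature change can add up to the same effect.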

Another form of data poisoning makes malware smarter. Attackers use such malware to compromise email by cloning phrases that outsmart the filtering algorithm. The technique is now stretching into biometrics, where threat actors lock out legitimate users and sneak in themselves.

Data poisoning & Deepfakes: Are they related?

Deepfakes are a variant of data poisoning, and they are expected to be the next big wave of digital crime. Videos, images, and voice recordings are altered in such a way that most people can’t tell them from the real thing.

Attackers have already started using this technique for blackmail, harassment, and corporate espionage.

Fake news also falls under the category of data poisoning. Popular social media algorithms are either too technically weak or too corrupted to identify fake news. More often than not, we see incorrect information rising in a user’s news feed and replacing authentic news.

How to stop data poisoning?

Frankly, cyber defense experts are still learning how best to defend against data poisoning attacks. Penetration testing and offensive security testing may uncover vulnerabilities that pave the way for outsiders to gain access to training models.

Some cybersecurity experts have also suggested a second layer of AI and ML algorithms to pinpoint errors in data training. The irony is we still need humans to check and test these algorithms!
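As a rough illustration of what such a second layer could look like, the sketch below flags training samples whose label disagrees with the majority label of their nearest neighbors. This is only one possible approach, assumed here for the sake of example; `flag_suspicious`, the choice of `k=3`, and the sample data are hypothetical.

```python
import math
from collections import Counter

def flag_suspicious(points, labels, k=3):
    """Return the indices of samples whose label differs from the
    majority label among their k nearest neighbours."""
    flagged = []
    for i, p in enumerate(points):
        # Distance from sample i to every other sample, with that sample's label.
        others = [(math.dist(p, q), labels[j])
                  for j, q in enumerate(points) if j != i]
        others.sort(key=lambda pair: pair[0])
        votes = Counter(label for _, label in others[:k])
        majority_label, _ = votes.most_common(1)[0]
        if majority_label != labels[i]:
            flagged.append(i)
    return flagged

# Made-up training set: two tight clusters, with one label flipped (index 3).
points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (0.1, 0.1),
          (5.0, 5.0), (5.1, 5.2), (5.2, 5.1), (5.1, 5.1)]
labels = ["benign", "benign", "benign", "malicious",
          "malicious", "malicious", "malicious", "malicious"]

print(flag_suspicious(points, labels))
```

On this data only the flipped sample at index 3 is flagged. A check like this narrows down where to look, but a person still has to decide whether a flagged sample is poisoned or simply unusual.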

Constant, real-time monitoring for privilege escalation is crucial to help mitigate such attacks.
