Organizations are fearful of ransomware and to avoid that risks many business organizations rely on AI and ML as their defense mechanism. However, in a continuously evolving threat landscape, attackers always find a way to harm you. Data poisoning is one such attack method where hackers launch the attack through AI and ML.

Why AI and ML are at risk

Like any other tech, AI is a two-sided coin. Every AI model processes lots of data before coming up with a "best guess".

“Hackers have used AI to attack authentication and identity validation, including voice and visualization hacking attempts,” says Garret Grajek, CEO of YouAttest. This ‘weaponized AI’ works as a key vector for attack.

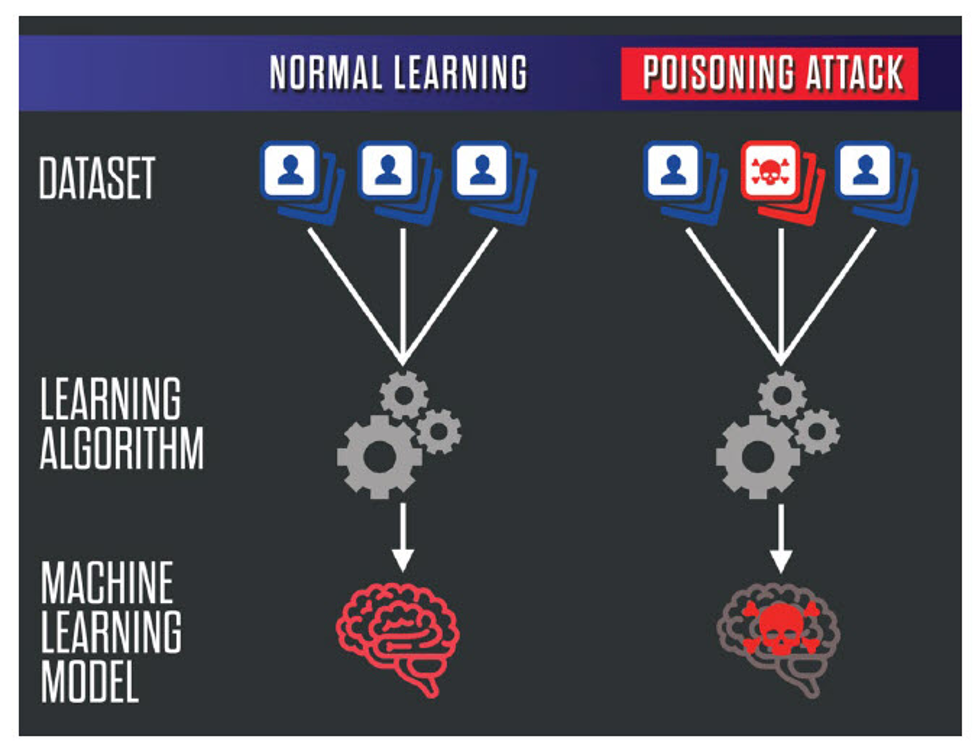

“Adversarial data poisoning is an effective attack against machine learning and threatens model integrity by introducing poisoned data into the training dataset,” researchers from Cornell University explain.

So, what makes attacks through AI and ML different from typical ‘bug in the system’ attacks?

There are inherent limits and weaknesses in the algorithms that can’t be fixed, says Marcus Comiter in a paper for Harvard University’s Belfer Center for Science and International Affairs.

So, it is clear that AI & ML techniques heavily rely on data & certain algorithms to fight cyber threats. That’s what allows them to identify the problems efficiently. On the flip side, this is a real threat as attackers can poison the data. The rise of AI and ML is leading directly to the sleeper threat of data poisoning.

Impact of human error

We already know that threat actors use AI and ML as attack vectors but how they do it? Let’s have a clear picture of the role they play.

Any CISO of an organization will admit that the greatest threat to an organization’s data is human nature. No, employees don’t intend to be a cyber risk but they are human after all and humans are distractible.

They miss a threat today that they would easily identify yesterday or tomorrow. Someone rushing to meet a deadline or searching for an important document may end up clicking on an infected attachment unintentionally.

Attackers know this and they always look for such an easy entry to the network and data—phishing no matter how outdated it sounds is still a very effective attack vector.

Data Poisoning: Brief explanation

There are 2 ways of data poisoning.

One is to inject junk information into the system that results in ‘Garbage-in, Garbage-out’.

It doesn’t seem extremely difficult to poison the algorithm as AI & ML only know what humans teach them. Imagine, you are teaching an algorithm to identify goats. In the process, you show it hundreds of pictures (in different positions) of black goats. At the same time, you are teaching the algorithm to identify dogs by showing pictures of white dogs.

Now, when a black dog slips into the data set the algorithm will identify it as a goat. To the algorithm, the black color animal is a goat. Only humans could tell the difference but the machine won’t be able to do it unless you tell the algorithm that dogs can be black too.

Likewise, if threat actors can get access to training data, they can manipulate your AI and ML setup to treat good software data as malicious ones.

The second way threat actors could take advantage of the training data is by generating a back door.

“Hackers may use AI to help choose which is the most likely vulnerability worth exploiting. Thus, malware can be placed in enterprises where the malware itself decides upon the time of the attack and which the best attack vector could be. These attacks, which are, by design, variable, make it harder and longer to detect.” says Grajek.

Be sure to read the second part as we have discussed how attackers use AI and ML for data poisoning in more detail and how you can safeguard the organization!

714-333-9620

714-333-9620